It’s been almost a month of learning AI and building software with it. From understanding prompt engineering and evaluations to implementing RAG and awaiting fine-tuning opportunities, it’s been both exciting and humbling. I’m a solo developer using Cursor to build projects from scratch, and here are my observations about the reality of AI-powered coding.

The Trust Balance

There’s a constant juggle between trusting AI completely and maintaining healthy skepticism. My journey has been as follows:

Phase 1: Micromanagement – I started by reviewing every single diff AI produced, accepting changes one by one with careful scrutiny.

Phase 2: Blind Faith – Soon, it was the opposite extreme, trusting all AI-generated code and hitting Cursor’s “Keep all” option without hesitation.

Phase 3: Automation Addiction – Then came the “Auto-run” mode, where I didn’t even want to wait to execute commands manually.

The Reality Check – Within days, my codebase had devolved into a tangled mess of spaghetti code.

The lesson? AI is incredibly powerful, but it still needs human oversight. The sweet spot lies somewhere between paranoid review and blind acceptance.

The Knowledge Gap: When AI Writes Your Code

There’s a fundamental difference between writing code manually and accepting AI-generated code. When you hand-craft every line, you maintain a mental map of your entire system. But with AI assistance, something unexpected happens:

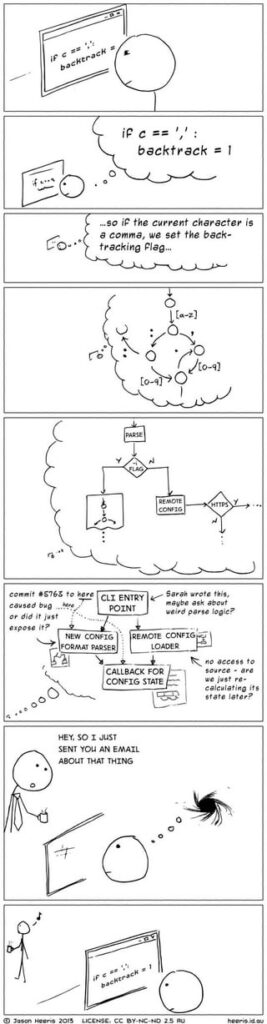

You can end up with files containing 1,000+ lines of code that you technically authored, yet you have no clear understanding of how the functions interconnect or how to trace a request through the system. It’s like being the architect of a building you’ve never actually walked through. This creates a dangerous disconnect between authorship and understanding. The code works, but the developer loses their intuitive grasp of the system’s inner workings.

Remember this xkcd joke? What if you don’t have any context to recollect in the first place? That’s what happened to me very soon.
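One low-effort way to rebuild some of that mental map is to list which functions a file defines and which of them call each other. Here is a minimal sketch of that idea, assuming the generated code is Python (my own projects may differ, and this is not something Cursor provides). The `outline_calls` helper and the `EXAMPLE` source are hypothetical names made up for illustration, and the sketch only sees direct calls by name between top-level functions.

```python
import ast


def outline_calls(source: str) -> dict[str, list[str]]:
    """Map each top-level function to the other top-level functions it calls."""
    tree = ast.parse(source)
    functions = [
        node
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    ]
    defined = {fn.name for fn in functions}

    outline: dict[str, list[str]] = {}
    for fn in functions:
        callees = set()
        for child in ast.walk(fn):
            # Only direct calls by name, e.g. helper(x).
            # Method calls such as obj.helper(x) are ignored in this sketch.
            if isinstance(child, ast.Call) and isinstance(child.func, ast.Name):
                name = child.func.id
                if name in defined and name != fn.name:
                    callees.add(name)
        outline[fn.name] = sorted(callees)
    return outline


# Hypothetical stand-in for an AI-generated file.
EXAMPLE = '''
def load(path):
    return open(path).read()

def clean(text):
    return text.strip()

def run(path):
    return clean(load(path))
'''

if __name__ == "__main__":
    for name, callees in outline_calls(EXAMPLE).items():
        print(f"{name} -> {', '.join(callees) or '(no local calls)'}")
```

Running something like this on a 1,000-line file will not replace reading the code, but it gives a starting list of entry points and dependencies to walk through.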

Lazy Prompts, High Expectations

Here’s an uncomfortable truth: we’re often lazy with our prompts while expecting AI to read our minds and deliver perfect results.

I started with overly generic prompts like “You are an expert prompt engineer” and so on. But soon the prompts changed to “Fix this” and “Rewrite this function” and so on. The reality is that AI output quality directly correlates with prompt quality. Garbage in, garbage out – but with a twist. AI is forgiving enough to produce something from vague prompts, which can mask the fact that we’re not communicating effectively.
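To make the contrast concrete, here is a small sketch of what separates “Fix this” from a prompt that carries its own context. The `PromptSpec` structure, its field names, and the `missing_parts` check are all hypothetical; they are just one way to force yourself to fill in the blanks before hitting enter, not a format any tool requires.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the pieces a specific prompt usually needs."""

    task: str
    context: str = ""
    constraints: list[str] = field(default_factory=list)
    expected_output: str = ""


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the non-empty parts into one prompt string."""
    sections = [f"Task: {spec.task}"]
    if spec.context:
        sections.append(f"Context: {spec.context}")
    if spec.constraints:
        bullets = "\n".join(f"- {item}" for item in spec.constraints)
        sections.append(f"Constraints:\n{bullets}")
    if spec.expected_output:
        sections.append(f"Expected output: {spec.expected_output}")
    return "\n\n".join(sections)


def missing_parts(spec: PromptSpec) -> list[str]:
    """Name the parts left empty, as a nudge before sending a lazy prompt."""
    parts = {
        "context": spec.context,
        "constraints": spec.constraints,
        "expected_output": spec.expected_output,
    }
    return [name for name, value in parts.items() if not value]


if __name__ == "__main__":
    lazy = PromptSpec(task="Fix this")
    print(missing_parts(lazy))

    specific = PromptSpec(
        task="Fix the date parsing bug in parse_invoice",
        context="Dates arrive as DD/MM/YYYY but are parsed as MM/DD/YYYY.",
        constraints=["Do not change the function signature", "Keep existing tests passing"],
        expected_output="A diff limited to parse_invoice plus one new test.",
    )
    print(build_prompt(specific))
```

The function names in the example prompt (`parse_invoice`) are invented too. The point is only that the lazy version leaves three of the four parts empty, and the AI will still happily produce something from it.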

Key Takeaways

Maintain Strategic Oversight – Don’t swing between extremes. Develop a middle path where you leverage AI’s speed while maintaining architectural awareness.

Preserve System Knowledge – Regularly step back and ensure you understand the high-level structure of what’s being built, even if AI handles the implementation details. I might end up using some AI system to generate better documentation for this.

Invest in Better Prompts – Treat prompt engineering as a skill worth developing. Clear, specific, context-rich prompts yield dramatically better results. And build the patience to give better prompts every single time you interact with AI.

With a lot more AI development to come, I’m sure this post will get outdated over time. Exciting times!

What’s been your experience with AI-assisted development? Have you found similar patterns in your own work?